An Introduction

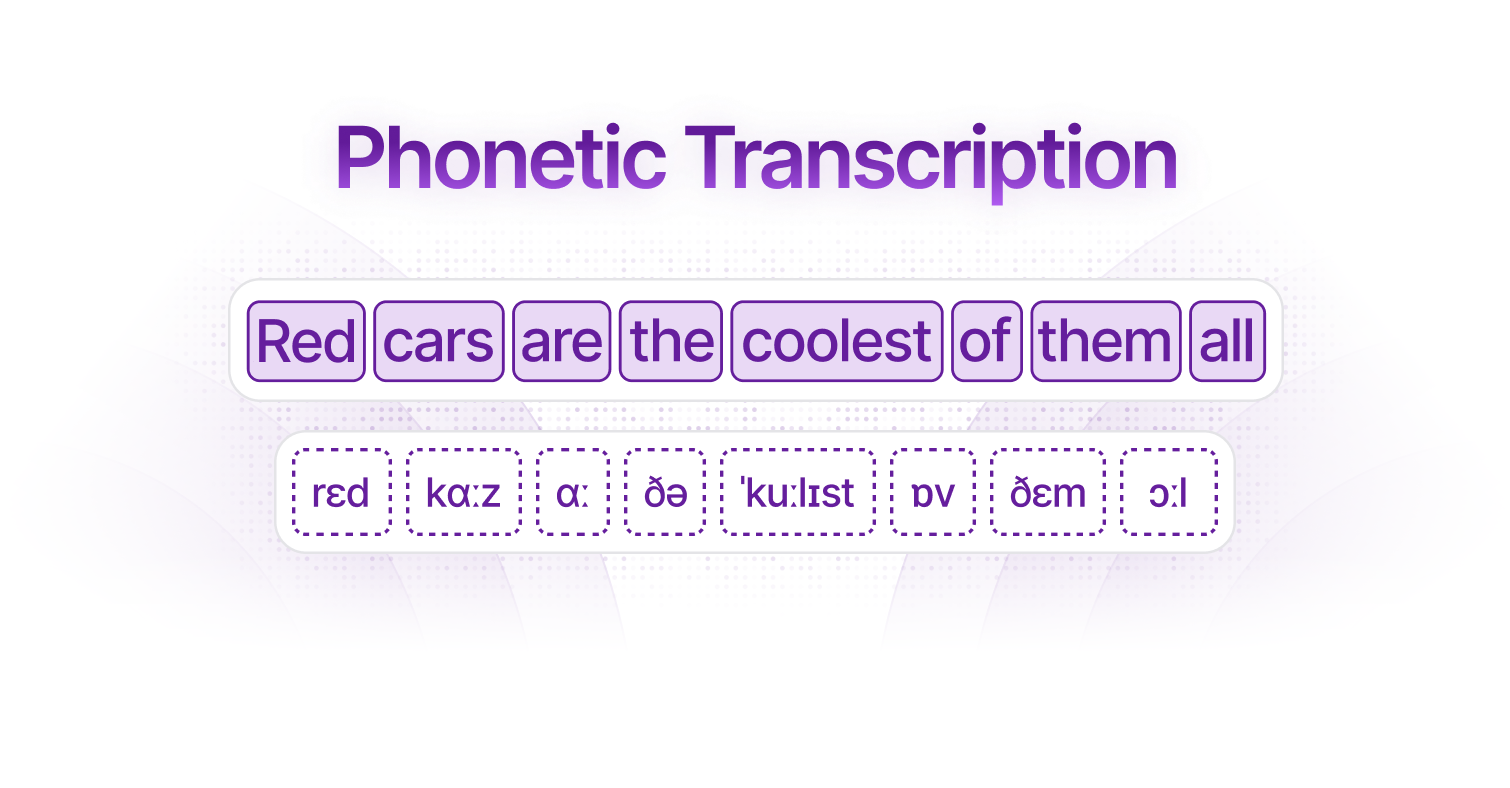

The International Phonetic Alphabet (IPA) is like the Swiss Army knife of pronunciation—it gives us precise symbols to represent every sound humans make in language. In recent years, predicting these phonemic transcriptions from audio has become a popular machine learning task. But how do we calculate the accuracy of these models?

At Koel Labs, we use two key metrics to evaluate phonemic transcription models:

- Phonemic Error Rate (PER): The classic "how many mistakes did you make?" metric

- Weighted Phonemic Edit Distance (WPED): A smarter approach that considers how similar sounds are to each other

Why Traditional Metrics Fall Short: A Tale of Three Words

Let's say we're trying to transcribe the word "Bop". Our model could make different types of mistakes, and this is where things get interesting.

Consider two models making different predictions:

- Model 1 predicts: "Pop"

- Model 2 predicts: "Sop"

From a linguistics perspective, these mistakes are not created equal:

- 'B' and 'P' are like cousins—they're both plosive bilabial consonants, made by stopping airflow with your lips. The only difference is that 'B' is voiced (your vocal cords vibrate) and 'P' isn't.

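- 'B' and 'S', on the other hand, are more like distant relatives. 'S' is a voiceless alveolar fricative, made by forcing air through a narrow gap between your tongue and the ridge behind your upper teeth. It differs from 'B' in voicing, in where it is made, and in how the air is released: a completely different sound!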

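This is where traditional PER falls short. It counts the substitutions, deletions, and insertions needed to turn the prediction into the reference, divides that count by the number of phonemes in the reference, and treats every substitution as equally wrong. In our example, each word has three phonemes: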

"Bop" → "Pop": 1 substitution = 33.33% error rate

"Bop" → "Sop": 1 substitution = 33.33% error rate

This is like saying someone who almost hit the bullseye did just as poorly as someone who hit the wall next to the dartboard. Scored this way, a model that confuses similar sounds looks exactly as bad as one that confuses unrelated sounds, which can make evaluations very misleading.
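To make the arithmetic concrete, here is a minimal Python sketch of PER. It assumes the standard Levenshtein-based definition, (S + D + I) / N with N the number of reference phonemes, and a simplified IPA transcription of "Bop" as /bɑp/. The function names are illustrative only, not part of any Koel Labs or library API.

```python
def edit_distance(ref, hyp):
    """Minimum number of substitutions, deletions, and insertions (unit costs)."""
    n, m = len(ref), len(hyp)
    # dp[i][j] = fewest edits turning ref[:i] into hyp[:j]
    dp = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        dp[i][0] = i  # delete all i reference phonemes
    for j in range(m + 1):
        dp[0][j] = j  # insert all j hypothesis phonemes
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,        # deletion
                dp[i][j - 1] + 1,        # insertion
                dp[i - 1][j - 1] + sub,  # substitution (or match if sub == 0)
            )
    return dp[n][m]


def phoneme_error_rate(ref, hyp):
    """PER = (S + D + I) / N, where N is the number of reference phonemes."""
    return edit_distance(ref, hyp) / len(ref)


reference = ["b", "ɑ", "p"]  # "Bop" (assumed transcription)
print(phoneme_error_rate(reference, ["p", "ɑ", "p"]))  # "Pop" -> 0.3333333333333333
print(phoneme_error_rate(reference, ["s", "ɑ", "p"]))  # "Sop" -> 0.3333333333333333
```

Both predictions receive exactly the same score, because the unit substitution cost has no notion of how similar 'b' is to 'p' versus 's'.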

Weighted Phonemic Edit Distance

This is where WPED comes to the rescue, powered by the Panphon library. Instead of treating two phonemes as either identical or completely different, it represents each phoneme as a vector of articulatory features, answering questions like:

- Is it voiced?

- Where in the mouth is it made?

- How is the air released?

Each phoneme becomes a feature vector, something like:

B: [+voiced, +bilabial, +plosive, -fricative, ...]

P: [-voiced, +bilabial, +plosive, -fricative, ...]

S: [-voiced, +alveolar, -plosive, +fricative, ...]

When we calculate the distance between these vectors, we get a much more nuanced view:

Distance("Bop" → "Pop") = 0.2 // Small difference

Distance("Bop" → "Sop") = 0.8 // Large difference
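The sketch below illustrates the idea behind a feature-weighted edit distance in plain Python. It is not Panphon's implementation and rests on loud assumptions: the six binary features and their values are hypothetical and hand-picked, a substitution costs the fraction of features on which two phonemes disagree, and insertions and deletions cost 1. Panphon uses a much larger feature set and its own weighting, so these toy numbers will not match the figures above; what should carry over is the ordering.

```python
# HYPOTHETICAL toy features, NOT Panphon's real feature set.
# Order: (voiced, bilabial, alveolar, plosive, fricative, syllabic)
FEATURES = {
    "b": (1, 1, 0, 1, 0, 0),
    "p": (0, 1, 0, 1, 0, 0),
    "s": (0, 0, 1, 0, 1, 0),
    "ɑ": (1, 0, 0, 0, 0, 1),
}


def substitution_cost(a, b):
    """Fraction of toy features on which two phonemes disagree (0.0 = identical)."""
    fa, fb = FEATURES[a], FEATURES[b]
    return sum(x != y for x, y in zip(fa, fb)) / len(fa)


def weighted_edit_distance(ref, hyp, indel_cost=1.0):
    """Levenshtein distance where a substitution costs its feature distance."""
    n, m = len(ref), len(hyp)
    dp = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dp[i][0] = i * indel_cost  # delete every reference phoneme
    for j in range(1, m + 1):
        dp[0][j] = j * indel_cost  # insert every hypothesis phoneme
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            dp[i][j] = min(
                dp[i - 1][j] + indel_cost,  # deletion
                dp[i][j - 1] + indel_cost,  # insertion
                dp[i - 1][j - 1] + substitution_cost(ref[i - 1], hyp[j - 1]),
            )
    return dp[n][m]


reference = ["b", "ɑ", "p"]  # "Bop" (assumed transcription)
print(f"{weighted_edit_distance(reference, ['p', 'ɑ', 'p']):.3f}")  # "Pop" -> 0.167
print(f"{weighted_edit_distance(reference, ['s', 'ɑ', 'p']):.3f}")  # "Sop" -> 0.833
```

With these toy features, 'b' and 'p' differ only in voicing, so swapping them costs 1/6, while 'b' and 's' differ in five of the six features. The dynamic program is identical to the plain edit distance used for PER; only the substitution cost changes.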

Why This Matters

When you're teaching a model to transcribe speech, you want it to understand that predicting a similar sound is better than predicting a completely different one. This is especially important because different models might use different phoneme vocabularies—some might have 40 symbols, others up to 400.

Traditional PER might unfairly favor models that happen to use the exact same phoneme set as your ground truth data, even if other models are making more linguistically sensible predictions. WPED helps level the playing field by considering phonetic similarity.

The Takeaway

By using WPED alongside traditional metrics like PER, we can better understand how well our models are really performing at phonemic transcription. It's not just about getting the exact right symbol—it's about understanding the underlying sounds of language.

As we continue to develop better speech recognition models, metrics like WPED will be crucial in helping us measure progress in a way that actually reflects linguistic reality. After all, in the world of pronunciation, being close sometimes counts for a lot more than traditional metrics might suggest!